Abstract

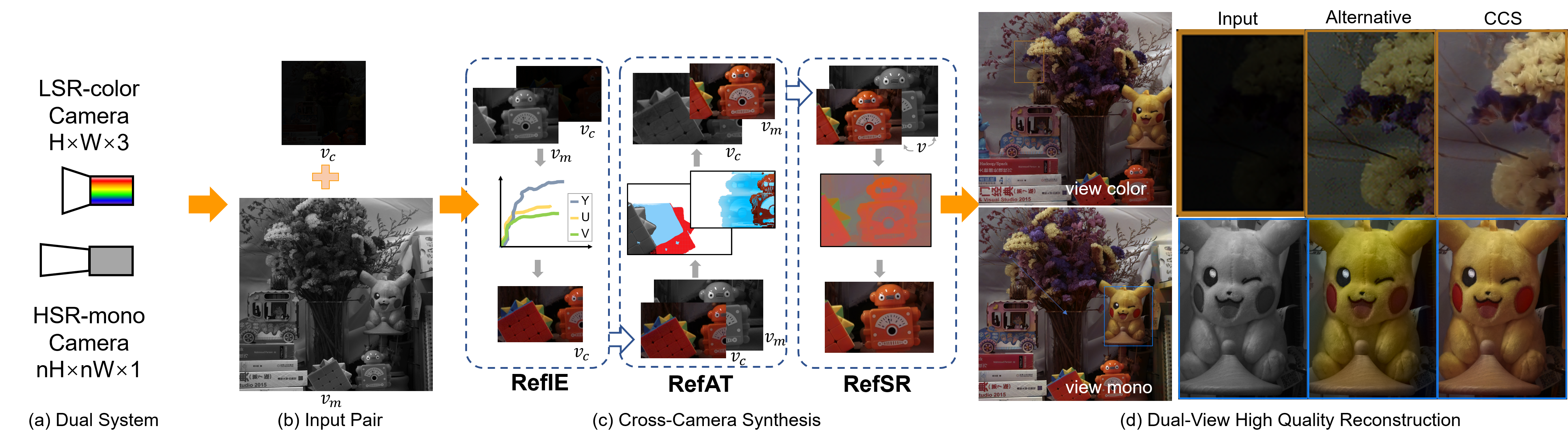

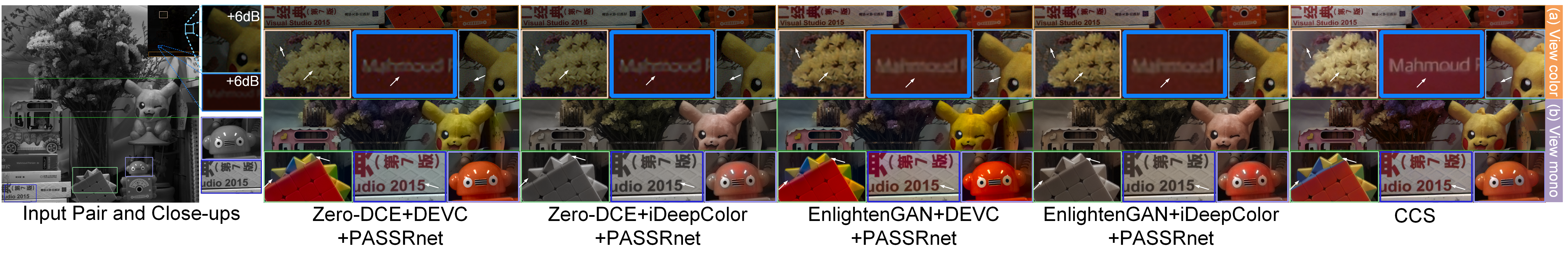

This paper presents a framework for low-light color imaging using a dual camera system that combines a high spatial resolution monochromatic (HSR-mono) image and a low spatial resolution color (LSR-color) image. We propose a cross-camera synthesis (CCS) module to learn and transfer illumination, color, and resolution attributes across paired HSR-mono and LSR-color images to recover brightness- and color-adjusted high spatial resolution color (HSR-color) images at both camera views.

Jointly characterizing various attributes for final synthesis is extremely challenging because of significant domain gaps across cameras.

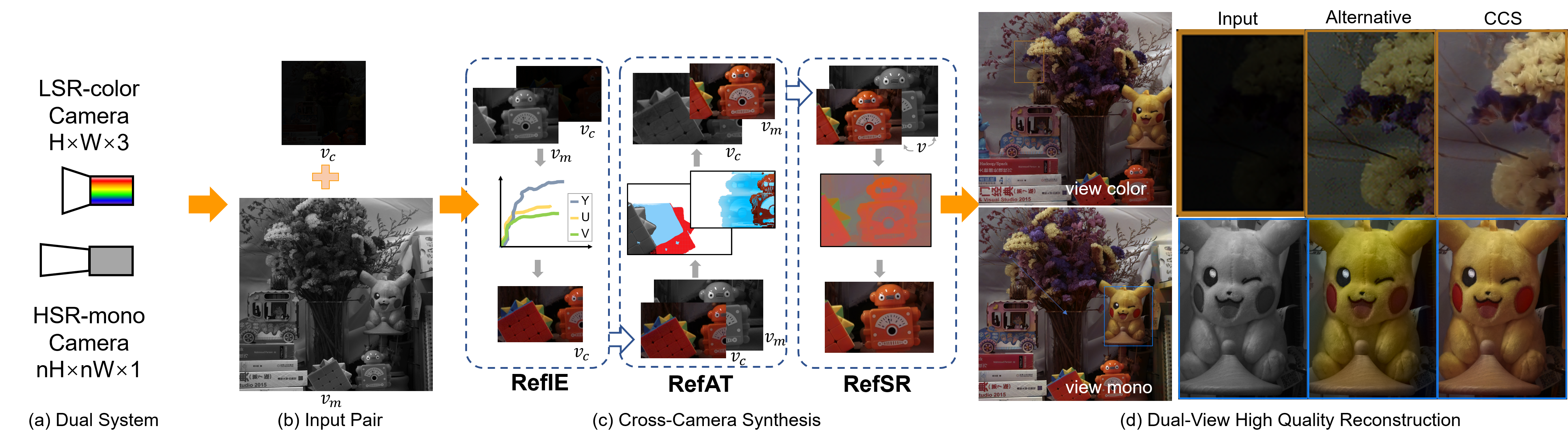

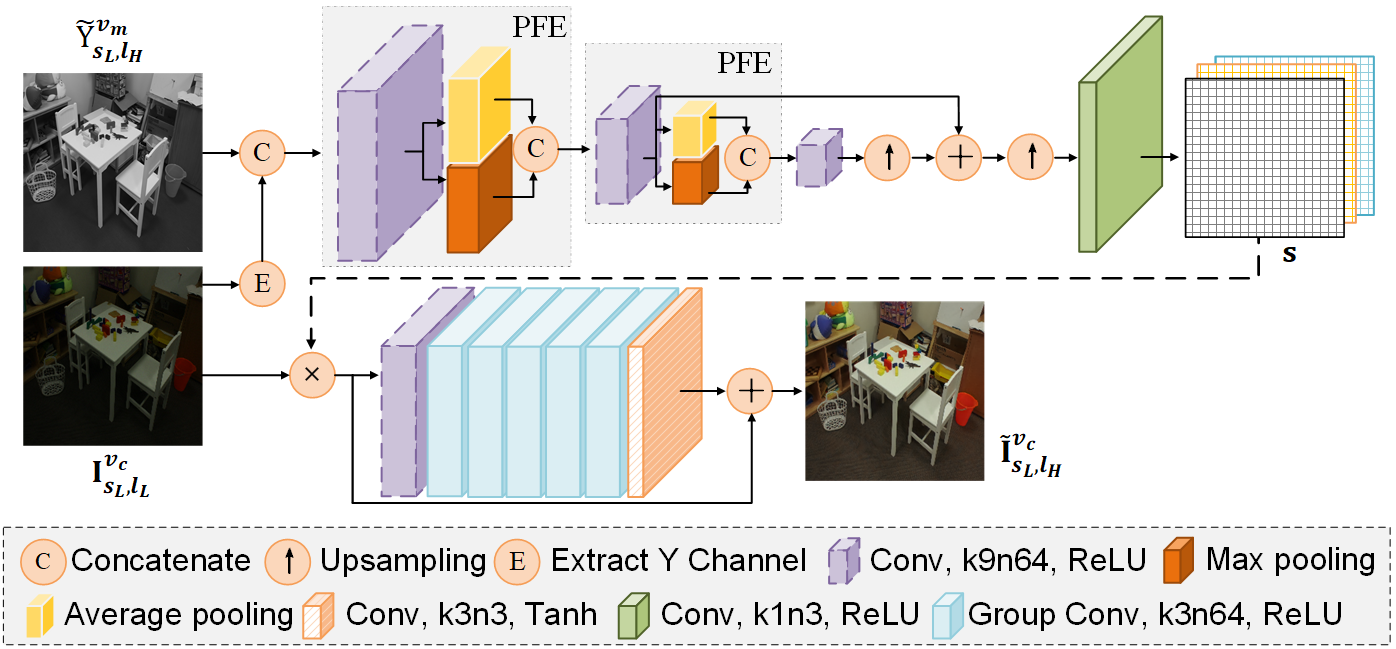

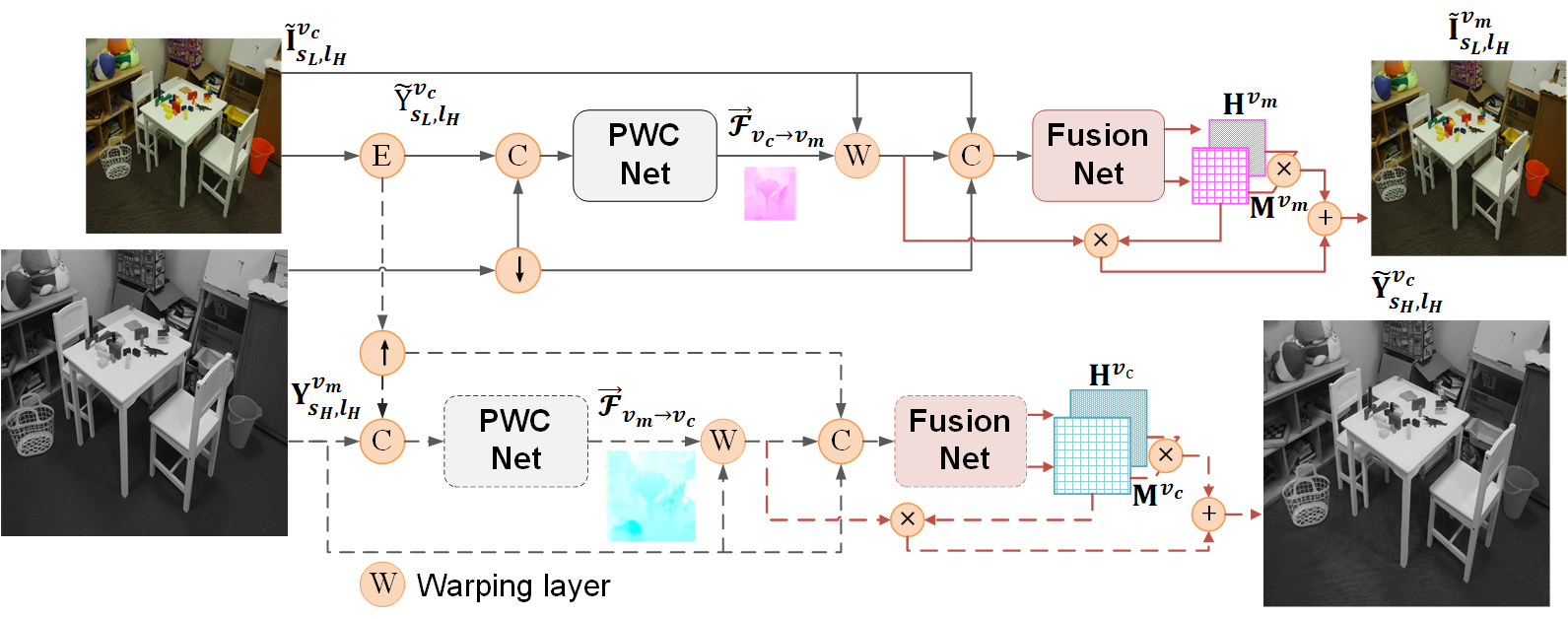

The proposed CCS method consists of three subtasks: reference-based illumination enhancement (RefIE), reference-based appearance transfer (RefAT), and reference-based super resolution (RefSR), by which we can characterize, transfer, and enhance illumination, color, and resolution at both views. Each subtask is implemented using deep neural networks (DNNs) that are first trained for each subtask separately and then fine-tuned jointly.

Experimental results suggest that the proposed CCS model achieves superior qualitative and quantitative performance on both synthetic content from popular datasets and real-captured scenes. Ablation studies further demonstrate that the model generalizes to various exposures and camera baselines.